I recently attended a talk by Hannah Goodwin on the fragmentation, alteration, and deletion of female bodies in digital media. I found her approach to deletion compelling, and was inspired to revisit an old project of mine that used and altered found images. For that project I wrote a script to gather images from the now-defunct Mass Traveler webcams, and created an algorithm to blend multiple frames together over time.

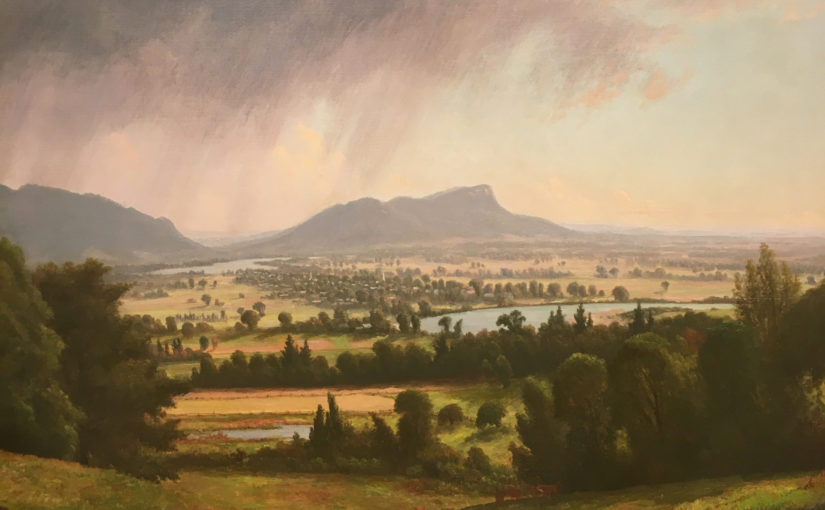

The technique hides almost all individual movement, much as Louis Daguerre’s 1838 photograph, Boulevard du Temple, erases everything moving except for the shoe shiner and their customer. In my case, the traffic camera’s purpose is inverted to create a landscape that is devoid of traffic. Daylight scenes become surreal as the familiar bridge appears strangely unpopulated. As evening falls, the cars paint in light on wet pavement.
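
As a rough illustration of the idea (not the original script, whose details are not given here), blending frames over time can be sketched in Python as below. It assumes each frame is a small grayscale image stored as a nested list of brightness values; the function name `blend_frames` and its `mode` parameter are hypothetical. Averaging leaves a faint ghost of anything that moved, while a per-pixel median drops it entirely as long as the background is visible in most frames.

```python
from statistics import median


def blend_frames(frames, mode="mean"):
    """Blend equally sized grayscale frames pixel by pixel.

    frames: list of images, each a list of rows of brightness values (0-255).
    mode:   "mean" averages each pixel over time; "median" takes the middle value.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    blended = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect this pixel's value from every frame in the sequence.
            samples = [frame[y][x] for frame in frames]
            if mode == "median":
                value = median(samples)
            else:
                value = sum(samples) / len(samples)
            row.append(round(value))
        blended.append(row)
    return blended


# Toy example: a one-row "road" of brightness 100, with a bright "car" (255)
# that occupies a different pixel in each of five frames.
frames = []
for position in range(5):
    road = [100] * 5
    road[position] = 255
    frames.append([road])

mean_image = blend_frames(frames, mode="mean")      # [[131, 131, 131, 131, 131]]
median_image = blend_frames(frames, mode="median")  # [[100, 100, 100, 100, 100]]
```

In the toy example the car brightens every averaged pixel slightly, but disappears completely from the median image, which is the emptied-out road effect described above.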

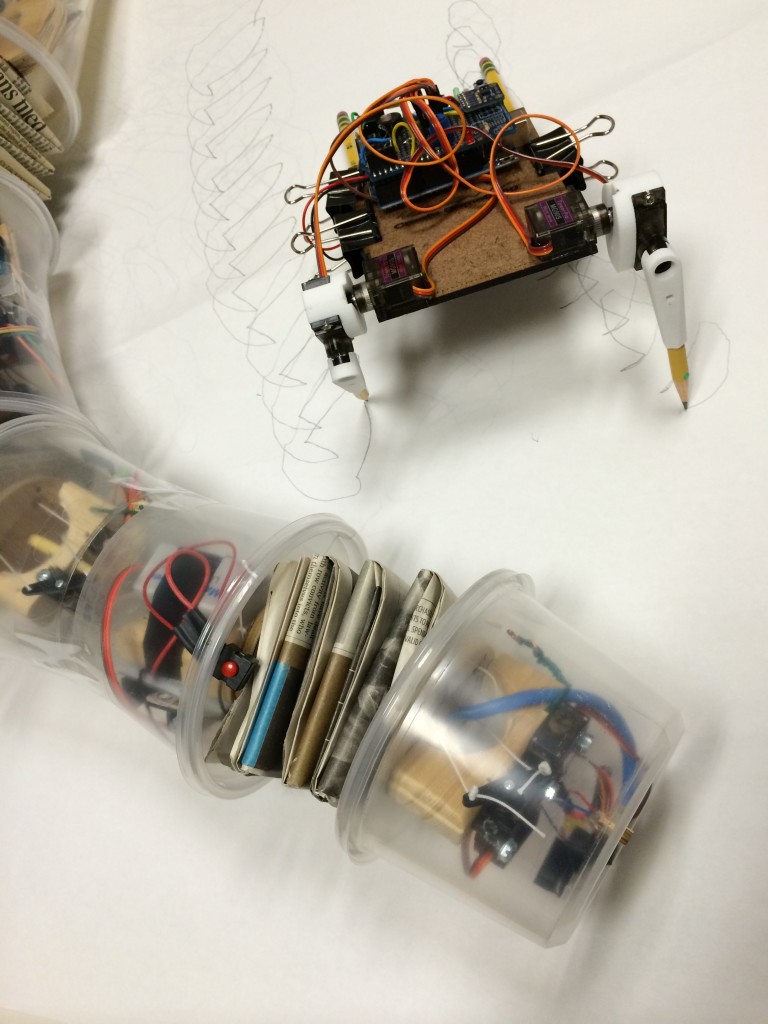

The theme of erasure is relevant to this moment, as algorithms and artificial intelligence shape more and more of the images we see. To wit, my Google Clips arrived yesterday. It is a camera that decides for itself when to take little movies, looking especially for happy faces and pets. Whereas the technological (de)selection of subjects in work like Daguerre’s was somewhat arbitrary, that selection is now driven by opaque algorithms.

I find it interesting that for all the high-tech wizardry behind this new device, it still has a shutter button and a lens ring to turn. In mimicking older forms of technology, these elements make the device intelligible to people more familiar with “dumb” cameras. They also serve to lessen our apprehension as it gets to know our family and waits for us to smile.

Even with the safeguards that Google has built in, such as avoiding cloud processing or automatic uploads, Google Clips is not neutral. I began to feel uneasy as my daughters looked at this thing over the breakfast table. I don’t want to undermine their sense of sanctuary within our home, which we’ve cultivated by keeping out devices like Alexa. In family settings especially, this new tool could begin to erase a sense of the private, unobserved self for people who cannot give their consent. Whether that’s an inevitable consequence of an artificial photographer or just a possible pitfall of using it around children remains to be seen.